Neural Nets Classes and Basic Usage

Available astroNN Neural Net Classes

All astroNN neural nets inherit from intermediate base classes, which in turn inherit from NeuralNetMaster. NeuralNetMaster also relies on two major components, Normalizer and GeneratorMaster.

Normalizer (astroNN.nn.utilities.normalizer.Normalizer)

GeneratorMaster (astroNN.nn.utilities.generator.GeneratorMaster)

├── CNNDataGenerator

├── Bayesian_DataGenerator

└── CVAE_DataGenerator

NeuralNetMaster (astroNN.models.base_master_nn.NeuralNetMaster)

├── CNNBase

│ ├── ApogeeCNN

│ ├── StarNet2017

│ ├── ApogeeKplerEchelle

│ ├── SimplePloyNN

│ └── Cifar10CNN

├── BayesianCNNBase

│ ├── MNIST_BCNN # For authors testing only

│ ├── ApogeeBCNNCensored

│ └── ApogeeBCNN

├── ConvVAEBase

│ └── ApogeeCVAE # For authors testing only

└── CGANBase

  └── GalaxyGAN2017 # For authors testing only
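As a rough illustration of what this hierarchy implies, the sketch below uses empty stand-in classes that borrow names from the tree above. They are not the real astroNN classes; the point is only that a method defined once on the top-level class is available on every subclass.

```python
# Stand-in classes that mirror the shape of the tree above.
# They are NOT the real astroNN classes; only the names are borrowed.
class NeuralNetMaster:
    def summary(self):
        # Defined once at the top level, inherited by everything below
        return f"{type(self).__name__} (via NeuralNetMaster.summary)"


class CNNBase(NeuralNetMaster):
    pass


class ApogeeCNN(CNNBase):
    pass


# Method resolution order: ApogeeCNN -> CNNBase -> NeuralNetMaster -> object
mro_names = [cls.__name__ for cls in ApogeeCNN.__mro__]
print(mro_names)
print(ApogeeCNN().summary())
```

With the real package, the same inspection of `__mro__` on an imported class should show the corresponding chain of base classes.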

NeuralNetMaster Class API

All astroNN neural net classes inherit from astroNN.models.base_master_nn.NeuralNetMaster, so the methods of this class are shared across all of them.

- class astroNN.models.base_master_nn.NeuralNetMaster[source]

Top-level class for an astroNN neural network

- Variables:

name – Full English name

_model_type – Type of model

_model_identifier – Unique model identifier, by default using class name as ID

_implementation_version – Version of the model

_python_info – Placeholder to store python version used for debugging purpose

_astronn_ver – astroNN version detected

_keras_ver – Keras version detected

_tf_ver – Tensorflow version detected

currentdir – Current directory of the terminal

folder_name – Folder name to be saved

fullfilepath – Full file path

batch_size – Batch size for training, by default 64

autosave – Boolean to flag whether autosave model or not

task – Task

lr – Learning rate

max_epochs – Maximum epochs

val_size – Validation set size in percentage

val_num – Validation set actual number

beta_1 – Exponential decay rate for the 1st moment estimates for optimization algorithm

beta_2 – Exponential decay rate for the 2nd moment estimates for optimization algorithm

optimizer_epsilon – A small constant for numerical stability for optimization algorithm

optimizer – Placeholder for optimizer

targetname – Full name for every output neurones

- History:

2017-Dec-23 - Written - Henry Leung (University of Toronto)

2018-Jan-05 - Updated - Henry Leung (University of Toronto)

- flush()[source]

Flush GPU memory from TensorFlow. Experimental; it may not work.

- History:

2018-Jun-19 - Written - Henry Leung (University of Toronto)

- get_config()[source]

Get model configuration as a dictionary

- Returns:

dict

- History:

2018-May-23 - Written - Henry Leung (University of Toronto)

- get_weights()[source]

Get all model weights

- Returns:

weights arrays

- Return type:

ndarray

- History:

2018-May-23 - Written - Henry Leung (University of Toronto)

- property has_model

Get whether the instance has a model. A model is usually created when you call fit(); the instance has no model if fit() has not been called.

- Returns:

bool

- History:

2018-May-21 - Written - Henry Leung (University of Toronto)

- hessian(x=None, mean_output=False, mc_num=1, denormalize=False)[source]

Calculate the Hessian of the output with respect to the input.

Note that de-normalization (if True) assumes the output depends on the input data to first order, in which case the Hessian does not depend on the input scaling and only depends on the output scaling.

The Hessian can be all zeros. The common cause is that you did not use any activation, or used an activation that is still too linear in some sense, like ReLU.

- Parameters:

- Returns:

An array of Hessian

- Return type:

ndarray

- History:

2018-Jun-14 - Written - Henry Leung (University of Toronto)

- property input_shape

Get input shape of the prediction model

- Returns:

input shape expectation

- Return type:

- History:

2018-May-21 - Written - Henry Leung (University of Toronto)

- jacobian(x=None, mean_output=False, mc_num=1, denormalize=False)[source]

Calculate the Jacobian (gradient of the output with respect to the input). High-performance calculation, updated on 15 April 2018.

Note that de-normalization (if True) assumes the output depends on the input data to first order, in which case the equation is simply the Jacobian divided by the input scaling. This is usually a good approximation if you use ReLU throughout.

- Parameters:

- Returns:

An array of Jacobian

- Return type:

ndarray

- History:

2017-Nov-20 - Written - Henry Leung (University of Toronto)

2018-Apr-15 - Updated - Henry Leung (University of Toronto)

- property output_shape

Get output shape of the prediction model

- Returns:

output shape expectation

- Return type:

- History:

2018-May-19 - Written - Henry Leung (University of Toronto)

- plot_dense_stats()[source]

Plot dense layers weight statistics

- Returns:

A plot

- History:

2018-May-12 - Written - Henry Leung (University of Toronto)

- plot_model(name='model.png', show_shapes=True, show_layer_names=True, rankdir='TB')[source]

Plot model architecture with pydot and graphviz

- Parameters:

- Returns:

No return but will save the model architecture as png to disk

- save(name=None, model_plot=False)[source]

Save the model to disk

- Parameters:

name (string or path) – Folder name/path to be saved

model_plot (boolean) – True to plot model too

- Returns:

A saved folder on disk

- summary()[source]

Get model summary

- Returns:

None, just print

- History:

2018-May-23 - Written - Henry Leung (University of Toronto)

- transfer_weights(model, exclusion_output=False)[source]

Transfer the weights of a model to the current model if possible

- Parameters:

model (astroNN.model.NeuralNetMaster or keras.models.Model) – astroNN model

exclusion_output (bool) – whether to exclude output in the transfer or not

- Returns:

bool

- History:

2022-Mar-06 - Written - Henry Leung (University of Toronto)

- property uses_learning_phase

To determine whether the model depends on keras learning flag. If False, then setting learning phase will not affect the model

- Returns:

the boolean to indicate keras learning flag dependence of the model

- Return type:

- History:

2018-Jun-03 - Written - Henry Leung (University of Toronto)
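The hessian() note above, that a ReLU network can give an all-zero Hessian, can be checked numerically. The following is a minimal sketch in plain Python, assuming a made-up one-unit "network" and a finite-difference second derivative. It does not call astroNN, and the weights are arbitrary.

```python
import math


def relu(v):
    return max(0.0, v)


def tiny_net(x, activation):
    # Hypothetical one-hidden-unit network: 2.0 * activation(1.5 * x + 0.5)
    return 2.0 * activation(1.5 * x + 0.5)


def second_derivative(f, x, h=1e-3):
    # Central finite-difference estimate of d^2 f / dx^2
    return (f(x + h) - 2.0 * f(x) + f(x - h)) / (h * h)


x0 = 1.0  # a point away from the ReLU kink at x = -1/3
hess_relu = second_derivative(lambda x: tiny_net(x, relu), x0)
hess_tanh = second_derivative(lambda x: tiny_net(x, math.tanh), x0)

print("ReLU second derivative:", hess_relu)  # numerically zero
print("tanh second derivative:", hess_tanh)  # clearly non-zero
```

Away from its kinks a ReLU network is piecewise linear, so its second derivative vanishes, while a smooth activation such as tanh gives a non-zero curvature.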

CNNBase

Documented Members:

astroNN.models.SimplePloyNN()

- class astroNN.models.base_cnn.CNNBase[source]

Top-level class for a convolutional neural network

- evaluate(input_data, labels)[source]

Evaluate neural network by provided input data and labels and get back a metrics score

- Parameters:

input_data (ndarray) – Data to be inferred with neural network

labels (ndarray) – labels

- Returns:

metrics score dictionary

- Return type:

- History:

2018-May-20 - Written - Henry Leung (University of Toronto)

- fit(input_data, labels, sample_weight=None)[source]

Train a Convolutional neural network

- Parameters:

input_data (ndarray) – Data to be trained with neural network

labels (ndarray) – Labels to be trained with neural network

sample_weight (Union([NoneType, ndarray])) – Sample weights (if any)

- Returns:

None

- Return type:

NoneType

- History:

2017-Dec-06 - Written - Henry Leung (University of Toronto)

- fit_on_batch(input_data, labels, sample_weight=None)[source]

Train a neural network by running a single gradient update on all of your data, suitable for fine-tuning

- Parameters:

input_data (ndarray) – Data to be trained with neural network

labels (ndarray) – Labels to be trained with neural network

sample_weight (Union([NoneType, ndarray])) – Sample weights (if any)

- Returns:

None

- Return type:

NoneType

- History:

2018-Aug-22 - Written - Henry Leung (University of Toronto)

BayesianCNNBase

Documented Members:

- class astroNN.models.base_bayesian_cnn.BayesianCNNBase[source]

Top-level class for a Bayesian convolutional neural network

- History:

2018-Jan-06 - Written - Henry Leung (University of Toronto)

- evaluate(input_data, labels, inputs_err=None, labels_err=None, batch_size=None)[source]

Evaluate neural network by provided input data and labels and get back a metrics score

- Parameters:

input_data (ndarray) – Data to be trained with neural network

labels (ndarray) – Labels to be trained with neural network

inputs_err (Union([NoneType, ndarray])) – Error for input_data (if any), same shape with input_data.

labels_err (Union([NoneType, ndarray])) – Labels error (if any)

- Returns:

metrics score dictionary

- Return type:

- History:

2018-May-20 - Written - Henry Leung (University of Toronto)

- fit(input_data, labels, inputs_err=None, labels_err=None, sample_weight=None, experimental=False)[source]

Train a Bayesian neural network

- Parameters:

input_data (ndarray) – Data to be trained with neural network

labels (ndarray) – Labels to be trained with neural network

inputs_err (Union([NoneType, ndarray])) – Error for input_data (if any), same shape with input_data.

labels_err (Union([NoneType, ndarray])) – Labels error (if any)

sample_weight (Union([NoneType, ndarray])) – Sample weights (if any)

- Returns:

None

- Return type:

NoneType

- History:

2018-Jan-06 - Written - Henry Leung (University of Toronto)

2018-Apr-12 - Updated - Henry Leung (University of Toronto)

- fit_on_batch(input_data, labels, inputs_err=None, labels_err=None, sample_weight=None)[source]

Train a Bayesian neural network by running a single gradient update on all of your data, suitable for fine-tuning

- Parameters:

input_data (ndarray) – Data to be trained with neural network

labels (ndarray) – Labels to be trained with neural network

inputs_err (Union([NoneType, ndarray])) – Error for input_data (if any), same shape with input_data.

labels_err (Union([NoneType, ndarray])) – Labels error (if any)

sample_weight (Union([NoneType, ndarray])) – Sample weights (if any)

- Returns:

None

- Return type:

NoneType

- History:

- 2018-Aug-25 - Written - Henry Leung (University of Toronto)

- predict(input_data, inputs_err=None, batch_size=None)[source]

Test model, High performance version designed for fast variational inference on GPU

- Parameters:

input_data (ndarray) – Data to be inferred with neural network

inputs_err (Union([NoneType, ndarray])) – Error for input_data, same shape with input_data.

- Returns:

prediction and prediction uncertainty

- History:

2018-Jan-06 - Written - Henry Leung (University of Toronto)

2018-Apr-12 - Updated - Henry Leung (University of Toronto)
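To give a feel for what "prediction and prediction uncertainty" can mean for a stochastic network, here is a conceptual sketch in plain Python. It assumes a toy model with a single weight and dropout left on at inference time, and it summarizes repeated forward passes by their mean and spread. The function names, the drop rate and the weight are all hypothetical, and this is not the code astroNN runs in predict().

```python
import random
import statistics


def stochastic_forward_pass(x, rng, drop_rate=0.2):
    # Stand-in for one forward pass with dropout left ON at inference time:
    # the single "weight" 2.0 is either kept (and rescaled) or dropped
    keep = rng.random() >= drop_rate
    return (2.0 * x / (1.0 - drop_rate)) if keep else 0.0


def mc_predict(x, mc_num=100, seed=0):
    rng = random.Random(seed)
    samples = [stochastic_forward_pass(x, rng) for _ in range(mc_num)]
    # Mean of the samples is the prediction, their spread is the uncertainty
    return statistics.fmean(samples), statistics.stdev(samples)


prediction, uncertainty = mc_predict(1.5)
print(prediction, uncertainty)
```

More forward passes give a smoother estimate at the cost of more compute, which is one reason a GPU-friendly implementation matters.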

ConvVAEBase

Documented Members:

- class astroNN.models.base_vae.ConvVAEBase[source]

Top-level class for a Convolutional Variational Autoencoder

- History:

2018-Jan-06 - Written - Henry Leung (University of Toronto)

- evaluate(input_data, labels)[source]

Evaluate neural network by provided input data and labels/reconstruction target to get back a metrics score

- Parameters:

input_data (ndarray) – Data to be inferred with neural network

labels (ndarray) – labels

- Returns:

metrics score

- Return type:

- History:

2018-May-20 - Written - Henry Leung (University of Toronto)

- fit(input_data, input_recon_target, sample_weight=None)[source]

Train a Convolutional Autoencoder

- Parameters:

input_data (ndarray) – Data to be trained with neural network

input_recon_target (ndarray) – Data to be reconstructed

sample_weight (Union([NoneType, ndarray])) – Sample weights (if any)

- Returns:

None

- Return type:

NoneType

- History:

2017-Dec-06 - Written - Henry Leung (University of Toronto)

- fit_on_batch(input_data, input_recon_target, sample_weight=None)[source]

Train an autoencoder by running a single gradient update on all of your data, suitable for fine-tuning

- Parameters:

input_data (ndarray) – Data to be trained with neural network

input_recon_target (ndarray) – Data to be reconstructed

sample_weight (Union([NoneType, ndarray])) – Sample weights (if any)

- Returns:

None

- Return type:

NoneType

- History:

2018-Aug-25 - Written - Henry Leung (University of Toronto)

- jacobian_latent(x=None, mean_output=False, mc_num=1, denormalize=False)[source]

Calculate the Jacobian (gradient of the latent space with respect to the input). High-performance calculation, updated on 15 April 2018.

Note that de-normalization (if True) assumes the output depends on the input data to first order, in which case the equation is simply the Jacobian divided by the input scaling. This is usually a good approximation if you use ReLU throughout.

- Parameters:

- Returns:

An array of Jacobian

- Return type:

ndarray

- History:

2017-Nov-20 - Written - Henry Leung (University of Toronto)

2018-Apr-15 - Updated - Henry Leung (University of Toronto)

- predict(input_data)[source]

Use the neural network to do inference and get reconstructed data

- Parameters:

input_data (ndarray) – Data to be inferred with neural network

- Returns:

reconstructed data

- Return type:

ndarray

- History:

2017-Dec-06 - Written - Henry Leung (University of Toronto)

- predict_decoder(z)[source]

Use the decoder to reconstruct the output from latent space vectors

- Parameters:

z (ndarray) – Latent space vectors

- Returns:

output reconstruction

- Return type:

ndarray

- History:

2022-Dec-08 - Written - Henry Leung (University of Toronto)

- predict_encoder(input_data)[source]

Use the encoder to get the hidden layer encoding/representation

- Parameters:

input_data (ndarray) – Data to be inferred with neural network

- Returns:

hidden layer encoding/representation mean and std

- Return type:

ndarray

- History:

2017-Dec-06 - Written - Henry Leung (University of Toronto)
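The de-normalization remark in jacobian() and jacobian_latent() ("the Jacobian divided by the input scaling") follows from the chain rule. The sketch below illustrates it in plain Python under stated assumptions: a made-up linear "network", hypothetical normalization constants, and finite differences instead of automatic differentiation. Output scaling is deliberately left out.

```python
x_mean, x_std = 10.0, 4.0  # hypothetical input normalization constants


def network_on_normalized(x_norm):
    # Stand-in for a trained network that sees normalized inputs
    return 3.0 * x_norm + 1.0


def network_on_raw(x):
    # Same network, but fed raw inputs that are normalized first
    return network_on_normalized((x - x_mean) / x_std)


def derivative(f, x, h=1e-4):
    # Central finite-difference estimate of df/dx
    return (f(x + h) - f(x - h)) / (2.0 * h)


jac_normalized = derivative(network_on_normalized, 0.5)
jac_raw = derivative(network_on_raw, 12.0)  # x = 12 corresponds to x_norm = 0.5

# Chain rule: d(out)/dx = d(out)/dx_norm * d(x_norm)/dx = jac_normalized / x_std
print(jac_normalized, jac_raw, jac_normalized / x_std)
```

For a network that is exactly linear in its input the relation is exact. For a real network it holds locally, which matches the first-order assumption in the note.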

Workflow of Setting up astroNN Neural Nets Instances and Training

astroNN contains some predefined neural networks which work well in certain respects. For most general usage, I recommend creating your own neural network for more flexibility, taking advantage of astroNN custom loss functions and layers.

To use a predefined neural network, you generally have to set up an instance of an astroNN neural net class with some predefined architecture. For example,

    # import the neural net class from astroNN first
    from astroNN.models import ApogeeCNN

    # astronn_neuralnet is an astroNN neural network instance
    # In this case, it is an instance of ApogeeCNN
    astronn_neuralnet = ApogeeCNN()

Let's say you have your training data prepared. You should specify what the neural network outputs by setting targetname:

    # Just an example, if the training labels are Teff, logg, Fe and absmag
    astronn_neuralnet.targetname = ['teff', 'logg', 'Fe', 'absmag']

By default, astroNN generates the folder name automatically with the naming scheme astroNN_[month][day]_run[run number], but you can specify a custom name:

    # astronn_neuralnet is an astroNN neural network instance
    astronn_neuralnet.folder_name = 'some_custom_name'
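To make the default naming scheme concrete, here is a small sketch of how a name of the form astroNN_[month][day]_run[run number] could be generated. The helper `next_run_folder` is hypothetical, and the zero-padding widths are inferred from the example name astroNN_0101_run001 used elsewhere on this page. astroNN's own logic may differ.

```python
import datetime
import re


def next_run_folder(existing_names, today=None):
    """Return the next free 'astroNN_[month][day]_run[run number]' name."""
    today = today or datetime.date.today()
    prefix = f"astroNN_{today.month:02d}{today.day:02d}_run"
    pattern = re.compile(re.escape(prefix) + r"(\d{3})$")

    # Collect the run numbers already used for today's date
    used = []
    for name in existing_names:
        match = pattern.match(name)
        if match:
            used.append(int(match.group(1)))

    run_number = max(used, default=0) + 1
    return f"{prefix}{run_number:03d}"


new_year = datetime.date(2024, 1, 1)
print(next_run_folder([], today=new_year))
print(next_run_folder(["astroNN_0101_run001", "notes"], today=new_year))
```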

You can enable autosave (save everything immediately after training) or save it yourself:

    # To enable autosave
    astronn_neuralnet.autosave = True

    # To save everything manually; model_plot=True also plots the model (needs pydot_ng and graphviz)
    astronn_neuralnet.save(model_plot=False)

astroNN normalizes your data when you call the fit() method. The advantage of using the normalization provided by astroNN is that when the predict() method is called, the testing data is normalized and the prediction is denormalized in exactly the same way as the training data. This minimizes human error.

If you want to normalize the data yourself, you can disable it:

    # astronn_neuralnet is an astroNN neural network instance
    astronn_neuralnet.input_norm_mode = 0
    astronn_neuralnet.labels_norm_mode = 0
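If you do normalize by yourself, the key requirement is that the statistics recorded on the training set are reused unchanged at test time. The sketch below shows a mean/standard-deviation round trip in plain Python. The helper names and the Teff values are made up for illustration, and this is not astroNN's Normalizer.

```python
import statistics


def fit_normalizer(values):
    # Record the statistics of the training set once
    return statistics.fmean(values), statistics.pstdev(values)


def normalize(values, mean, std):
    return [(v - mean) / std for v in values]


def denormalize(values, mean, std):
    return [v * std + mean for v in values]


train_labels = [4500.0, 5000.0, 5500.0]  # hypothetical Teff values
label_mean, label_std = fit_normalizer(train_labels)

# A network trained on normalized labels also predicts normalized labels
normalized_prediction = normalize([5250.0], label_mean, label_std)
# The SAME mean/std must be used to map predictions back to physical units
prediction = denormalize(normalized_prediction, label_mean, label_std)
print(prediction)
```

Recomputing the mean and standard deviation on the test set instead would silently shift every prediction, which is the human error the built-in normalization is meant to avoid.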

You can add a list of Keras/astroNN callbacks:

    # replace the empty list with your callback(s)
    astronn_neuralnet.callbacks = []
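As one possible way to fill that list, the sketch below builds two standard Keras callbacks. It assumes TensorFlow is installed and that the validation loss is logged under the name `val_loss`. The helper `build_callbacks` and the patience value are illustrative choices, not astroNN defaults.

```python
def build_callbacks():
    # Imported lazily so this file can be imported without TensorFlow installed
    from tensorflow import keras

    return [
        # Stop training once the validation loss stops improving
        # (assumes the loss is logged as "val_loss")
        keras.callbacks.EarlyStopping(monitor="val_loss", patience=10),
        # Abort training as soon as the loss becomes NaN
        keras.callbacks.TerminateOnNaN(),
    ]


# Usage, with astronn_neuralnet being an astroNN neural network instance:
# astronn_neuralnet.callbacks = build_callbacks()
```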

Now everything is set up for training:

    # Start the training
    astronn_neuralnet.fit(x_train, y_train)

If you did not enable autosave, you can save the model after training:

    # To save everything; model_plot=True also plots the model (needs pydot_ng and graphviz)
    astronn_neuralnet.save(model_plot=False)

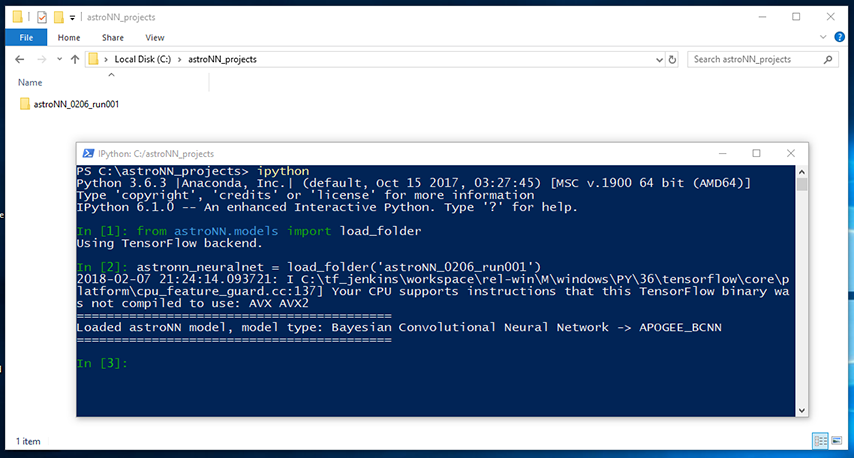

Load astroNN Generated Folders

The first way to load an astroNN generated folder is with the following code. Replace astroNN_0101_run001 with your folder name, which should be something like astroNN_[month][day]_run[run number].

- astroNN.models.load_folder(folder=None)[source]

To load astroNN model object from folder

- Parameters:

folder (str) – [optional] provide the folder name if you are outside the folder; do not specify it when you are inside the folder

- Returns:

astroNN Neural Network instance

- Return type:

astroNN.models.base_master_nn.NeuralNetMaster

- History:

2017-Dec-29 - Written - Henry Leung (University of Toronto)

    from astroNN.models import load_folder
    astronn_neuralnet = load_folder('astroNN_0101_run001')

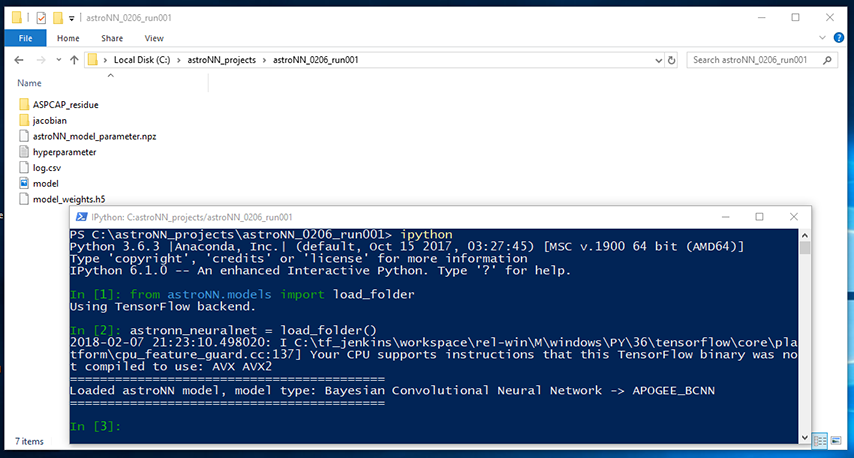

The second way to open an astroNN generated folder is to open a command line window inside the folder (or change the directory of your command line window to the folder) and run

    from astroNN.models import load_folder
    astronn_neuralnet = load_folder()

astronn_neuralnet will be an astroNN neural network object in this case. Its class depends on the neural network type, which astroNN detects automatically. You can access methods for doing inference or for continuing the training (fine-tuning). Refer to the tutorial for each type of neural network for more detail.

There are a few attributes of keras_model you can always access:

    # The model summary from Keras
    astronn_neuralnet.keras_model.summary()

    # The model input
    astronn_neuralnet.keras_model.input

    # The model input shape expectation
    astronn_neuralnet.keras_model.input_shape

    # The model output
    astronn_neuralnet.keras_model.output

    # The model output shape expectation
    astronn_neuralnet.keras_model.output_shape

The astroNN neural net object also carries targetname (hopefully set correctly by the author of the neural net) and the parameters used to normalize the training data (the normalization of training and testing data must be the same):

    # The targetname corresponding to each output neurone
    astronn_neuralnet.targetname

    # The model input
    astronn_neuralnet.keras_model.input

    # The mean used to normalize the training data
    astronn_neuralnet.input_mean_norm

    # The standard deviation used to normalize the training data
    astronn_neuralnet.input_std_norm

    # The mean used to normalize the training labels
    astronn_neuralnet.labels_mean_norm

    # The standard deviation used to normalize the training labels
    astronn_neuralnet.labels_std_norm

Load and Use Multiple astroNN Generated Folders

Note

astroNN fully supports eager execution now and you no longer need to context manage graph and session in order to use multiple model at the same time

In older versions, loading and using multiple models at once was tricky: Keras shares a global session by default if no default TensorFlow session is provided, so astroNN could encounter namespace/scope collisions. astroNN therefore assigned a separate Graph and Session to each astroNN neural network model, which you would use like this:

    from astroNN.models import load_folder

    astronn_model_1 = load_folder("astronn_model_1")
    astronn_model_2 = load_folder("astronn_model_2")
    astronn_model_3 = load_folder("astronn_model_3")

    with astronn_model_1.graph.as_default():
        with astronn_model_1.session.as_default():
            # do stuff with astronn_model_1 here
            pass

    with astronn_model_2.graph.as_default():
        with astronn_model_2.session.as_default():
            # do stuff with astronn_model_2 here
            pass

    with astronn_model_3.graph.as_default():
        with astronn_model_3.session.as_default():
            # do stuff with astronn_model_3 here
            pass

    # For example do things with astronn_model_1 again
    with astronn_model_1.graph.as_default():
        with astronn_model_1.session.as_default():
            # do more stuff with astronn_model_1 here
            pass
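Given the note above that graph and session context management is no longer needed, using several models can be sketched more simply. This assumes astroNN is installed, that the folder names point to existing saved models, and that each loaded model exposes the predict() method documented in the class API. The helper `predict_with_models` is hypothetical.

```python
def predict_with_models(folders, x):
    # Imported lazily; requires astroNN and the saved model folders to exist
    from astroNN.models import load_folder

    predictions = {}
    for folder in folders:
        # Each model is loaded and used directly, without graph/session contexts
        net = load_folder(folder)
        predictions[folder] = net.predict(x)
    return predictions


# Usage, with x_test being your own test data:
# results = predict_with_models(["astronn_model_1", "astronn_model_2"], x_test)
```

Note that the return value of predict() depends on the model type. For example, the Bayesian classes document it as returning both the prediction and the prediction uncertainty.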

Workflow of Testing and Distributing astroNN Models

The first step of the workflow should be loading an astroNN folder as described above.

Let's say you have loaded the folder and have some testing data. If you used astroNN normalization during training, you just need to provide the testing data without any normalization. The testing data will be normalized and the prediction will be denormalized in exactly the same way as the training data.

    # Run a forward pass of the test data through the neural net to get the prediction
    # The prediction is denormalized if you used astroNN normalization during training
    prediction = astronn_neuralnet.predict(x_test)

You can always train on new data starting from the existing weights:

    # Continue training an existing model (fine-tuning); astronn_neuralnet is a trained astroNN model
    astronn_neuralnet.fit(x_train, y_train)

Creating Your Own Model with astroNN Neural Net Classes

You can create your own neural network model that inherits from an astroNN neural network class to take advantage of the existing code in this package. Here we will go through how to create a simple model for classification on the MNIST dataset, using a neural network with one convolutional layer and one fully connected layer.

Let's create a Python script named custom_models.py under an arbitrary folder, say ~/ (your home folder), and add ~/custom_models.py to the astroNN configuration file.

    # import everything we need
    from tensorflow import keras
    # this is the astroNN neural net abstract class we are going to inherit from
    from astroNN.models.base_cnn import CNNBase

    regularizers = keras.regularizers
    Model = keras.models.Model
    MaxPooling2D, Conv2D, Dense, Flatten, Activation, Input = (
        keras.layers.MaxPooling2D, keras.layers.Conv2D,
        keras.layers.Dense, keras.layers.Flatten,
        keras.layers.Activation, keras.layers.Input,
    )

    # now we are creating a custom model based on astroNN neural net abstract class
    class my_custom_model(CNNBase):
        def __init__(self, lr=0.005):
            # standard super for inheriting abstract class
            super().__init__()

            # some default hyperparameters
            self._implementation_version = '1.0'
            self.initializer = 'he_normal'
            self.activation = 'relu'
            self.num_filters = [8]
            self.filter_len = (3, 3)
            self.pool_length = (4, 4)
            self.num_hidden = [128]
            self.max_epochs = 1
            self.lr = lr
            self.reduce_lr_epsilon = 0.00005

            self.task = 'classification'
            # you should set the targetname so that you know what the output neurones represent
            # in this case the output neurones simply represent digits
            self.targetname = ['Zero', 'One', 'Two', 'Three', 'Four', 'Five', 'Six', 'Seven', 'Eight', 'Nine']

            # set default input norm mode to 255 to normalize images correctly
            self.input_norm_mode = 255
            # set default labels norm mode to 0 (equivalent to doing nothing) so labels are left unchanged
            self.labels_norm_mode = 0

        def model(self):
            input_tensor = Input(shape=self._input_shape, name='input')
            cnn_layer_1 = Conv2D(kernel_initializer=self.initializer, padding="same", filters=self.num_filters[0],
                                 kernel_size=self.filter_len)(input_tensor)
            activation_1 = Activation(activation=self.activation)(cnn_layer_1)
            maxpool_1 = MaxPooling2D(pool_size=self.pool_length)(activation_1)
            flattener = Flatten()(maxpool_1)
            layer_2 = Dense(units=self.num_hidden[0], kernel_initializer=self.initializer)(flattener)
            activation_2 = Activation(activation=self.activation)(layer_2)
            layer_3 = Dense(units=self.labels_shape, kernel_initializer=self.initializer)(activation_2)
            output = Activation(activation=self._last_layer_activation, name='output')(layer_3)

            model = Model(inputs=input_tensor, outputs=output)

            return model

Save the file, then open Python in the same location as the script:

    # import everything we need
    from custom_models import my_custom_model
    from tensorflow.keras.datasets import mnist
    from tensorflow.keras import utils

    # load MNIST
    (x_train, y_train), (x_test, y_test) = mnist.load_data()
    # convert to the appropriate type
    x_train = x_train.astype('float32')
    x_test = x_test.astype('float32')
    y_train = utils.to_categorical(y_train, 10)

    # create a neural network instance
    net = my_custom_model()

    # train
    net.fit(x_train, y_train)

    # save the model after training
    net.save("trained_models_folder")
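For readers unfamiliar with the label conversion in the script above, the following plain-Python sketch shows the kind of one-hot encoding that `to_categorical` produces: each integer label becomes a vector with one entry per class, matching the ten names in targetname. The helper `to_one_hot` is a stand-in written for illustration, not the Keras function itself.

```python
def to_one_hot(labels, num_classes):
    # Each integer label becomes a vector with a single 1.0 at that index
    return [
        [1.0 if index == label else 0.0 for index in range(num_classes)]
        for label in labels
    ]


encoded = to_one_hot([3, 0], 10)
for row in encoded:
    print(row)
```

Each row has ten entries, so the network needs ten output neurones, one per digit.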

If you want to share the trained model, copy custom_models.py into the saved folder so that astroNN can load it successfully on other computers.

Alternatively, send custom_models.py to the target computer and install it there by adding the file to config.ini on the target computer.
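Adding the file to config.ini can be done by hand in a text editor. As a hedged sketch of doing it programmatically, the code below uses Python's standard `configparser`. It assumes the setting lives in a `[NeuralNet]` section under a `CustomModelPath` key, which is an assumption you should check against your own config.ini, and it writes to a throwaway file rather than a real astroNN configuration.

```python
import configparser
import os
import tempfile


def register_custom_model(config_path, script_path):
    # ASSUMPTION: the setting is the CustomModelPath key in a [NeuralNet]
    # section; verify this against your own config.ini before relying on it
    config = configparser.ConfigParser(interpolation=None)
    config.optionxform = str  # keep key capitalization as written
    config.read(config_path)  # silently skipped if the file does not exist
    if not config.has_section("NeuralNet"):
        config.add_section("NeuralNet")
    config.set("NeuralNet", "CustomModelPath", script_path)
    with open(config_path, "w") as handle:
        config.write(handle)


# Demonstrated on a throwaway file rather than a real astroNN config
demo_dir = tempfile.mkdtemp()
demo_config = os.path.join(demo_dir, "config.ini")
register_custom_model(demo_config, os.path.expanduser("~/custom_models.py"))

with open(demo_config) as handle:
    print(handle.read())
```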

You can then load the folder on other computers by running Python inside the folder:

    # import everything we need
    from astroNN.models import load_folder

    net = load_folder()

or from outside the folder trained_models_folder:

    # import everything we need
    from astroNN.models import load_folder

    net = load_folder("trained_models_folder")

NeuralNetMaster Class

NeuralNetMaster is the top-level abstract class for all astroNN neural network subclasses. NeuralNetMaster defines the structure that an astroNN neural network class should follow.

NeuralNetMaster consists of a pre-training check (checking input and label shapes, a CPU/GPU check, and creating an astroNN folder for every run).
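The shape part of such a pre-training check can be sketched as follows. This is a hypothetical, simplified stand-in in plain Python that only verifies the number of samples matches between inputs and labels. The real checks in NeuralNetMaster do more and are implemented differently.

```python
def pre_training_check(input_data, labels):
    # Hypothetical stand-in for the kind of shape check described above:
    # every input sample needs exactly one corresponding label row
    if len(input_data) != len(labels):
        raise ValueError(
            f"Got {len(input_data)} inputs but {len(labels)} labels; they must match"
        )
    return len(input_data)


n_samples = pre_training_check([[0.1, 0.2], [0.3, 0.4]], [[1.0], [2.0]])
print(n_samples)
```

Failing early with a clear message is cheaper than discovering a mismatch partway through training.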